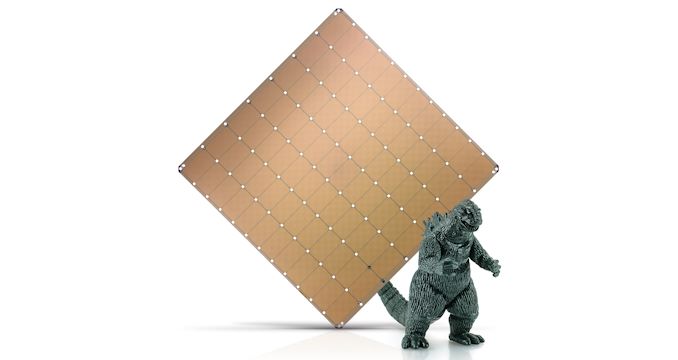

The Cerebras AI 2.6T 250M 4B is the largest chip ever built, with 2.6 trillion transistors. That scale gives it the processing power to train AI models and run inference faster than conventional chips can. With 250 million AI-optimized compute cores, it can work through complex neural networks in parallel, significantly reducing training times.

The Cerebras AI 2.6T 250M 4B chip also incorporates 4 billion SRAM memory cells. Keeping this memory on the chip, close to the compute cores, gives faster access to data and reduces the need for external memory transfers. As a result, AI models can process large datasets more efficiently, leading to quicker insights.
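To put the figures above in per-core terms, the short Python sketch below divides the quoted totals by the quoted core count. It takes the article's numbers at face value and assumes each SRAM cell stores one bit. The function name `per_core_budget` and the constant names are illustrative only and are not part of any Cerebras tooling.

```python
# Figures quoted in the text above, taken at face value.
TRANSISTORS = 2_600_000_000_000   # 2.6 trillion transistors
CORES = 250_000_000               # 250 million compute cores
SRAM_CELLS = 4_000_000_000        # 4 billion SRAM memory cells

# Assumption (not stated in the article): one bit per SRAM cell.
BITS_PER_CELL = 1


def per_core_budget(transistors, cores, sram_cells, bits_per_cell=BITS_PER_CELL):
    """Return per-core transistor and SRAM-bit counts, plus total SRAM in bytes."""
    total_bits = sram_cells * bits_per_cell
    return {
        "transistors_per_core": transistors / cores,
        "sram_bits_per_core": total_bits / cores,
        "total_sram_bytes": total_bits / 8,
    }


budget = per_core_budget(TRANSISTORS, CORES, SRAM_CELLS)
for name, value in budget.items():
    print(f"{name}: {value:,.0f}")
```

Changing `BITS_PER_CELL` or any of the totals shows how sensitive the per-core budget is to each quoted figure.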

Efficiency at Scale

Despite its immense power, the Cerebras AI 2.6T 250M 4B chip is designed with efficiency in mind. Because compute cores and memory cells sit on the same chip, data does not have to move between separate chips, which reduces latency and energy consumption. This streamlined architecture allows for faster computations while minimizing power requirements, making it well suited to energy-conscious AI applications.

Moreover, the Cerebras AI 2.6T 250M 4B chip employs advanced packaging technology to optimize cooling and power delivery. Its innovative water-cooling system ensures efficient heat dissipation, preventing thermal throttling and maintaining peak performance even under heavy workloads. This thermal management solution enables sustained high-performance computing, making the chip suitable for demanding AI tasks that require extended periods of processing.

Accelerating AI Research and Development

The Cerebras AI 2.6T 250M 4B chip has the potential to revolutionize AI research and development. Its immense computational power and memory capacity enable researchers to train larger and more complex models, unlocking new possibilities in AI applications. With reduced training times, researchers can iterate and experiment more rapidly, accelerating the pace of innovation in the field.

The Cerebras AI 2.6T 250M 4B chip’s efficiency also supports cost-effective scaling of AI infrastructure. By consolidating compute and memory resources into a single chip, organizations can reduce their hardware footprint and energy consumption while achieving higher performance. This lets businesses deploy AI solutions at a larger scale in industries such as healthcare, finance, and autonomous systems.

Paving the Way for Future AI Breakthroughs

The Cerebras AI 2.6T 250M 4B chip represents a significant milestone in AI hardware development. Its groundbreaking architecture and unprecedented scale push the boundaries of what is possible in AI computing. As the demand for AI continues to grow, chips like the Cerebras AI 2.6T 250M 4B will play a crucial role in enabling more advanced and sophisticated AI applications.

In conclusion, the Cerebras AI 2.6T 250M 4B chip is a game-changer in the field of AI, combining immense processing power, extensive memory capacity, and an energy-efficient design. By enabling faster training times and cost-effective scaling, it paves the way for a future where AI is more capable and accessible than ever before.